The Relevance of Our Work

We live in a world in which we are continually bombarded with information from our different sensory systems, so the combining of that information, known as "multisensory integration," is a ubiquitous phenomenon. The utility of multisensory interactions is illustrated by the numerous studies from our lab and others that have highlighted the important role these processes play in altering our behaviors and shaping our perceptions. In addition, our lab, along with others, is beginning to highlight the important role altered multisensory function plays in clinical conditions such as autism and schizophrenia.

Impact Through Multidisciplinary Research

Ultimately, we are interested in providing a more complete understanding of how multisensory processes impact our behaviors and perceptions, in better elucidating the neural substrates for these interactions, and in understanding how multisensory processes develop and are influenced by sensory experience. We study these fundamental questions using a multidisciplinary set of approaches, including animal behavior, human psychophysics, neuroimaging (ERP and fMRI) and neurophysiological techniques. Along with our interest in the brain bases for multisensory processes under normal circumstances, we are also interested in examining how multisensory circuits are altered in an array of clinical conditions, including attention deficit hyperactivity disorder, autism spectrum disorder and developmental dyslexia.

At the Wallace Lab, we celebrate neurodiversity and foster an inclusive research environment where all individuals are valued and supported.

Our work advances understanding of sensory processing across diverse populations. We ensure accessibility, clear communication, and a welcoming space for participants, collaborators, and team members.

By embracing neurodiversity, we enhance scientific discovery and deepen our understanding of how individuals experience the world.

Our Research

Audiovisual motion processing

Adam Tiesman

My research examines human auditory and visual motion perception using psychophysics, computational modeling, and EEG. I investigate how motion cues are integrated in an audiovisual motion discrimination task, revealing both optimal and average cue combination strategies influenced by attention, modality, and stimulus statistics. My current work explores trial history effects, EEG correlates of motion perception, and motion processing in VR/AR environments. Through this, I aim to uncover the behavioral and neural mechanisms underlying natural motion perception.
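For readers unfamiliar with the terms, "optimal" cue combination is commonly formalized as a reliability-weighted average of the auditory and visual estimates, while "average" combination weights both cues equally regardless of reliability. The sketch below illustrates the difference under the assumption of independent Gaussian noise on each cue. The function names and numbers are hypothetical and are not taken from the lab's analysis code.

```python
def combine_optimal(est_a, sigma_a, est_v, sigma_v):
    """Reliability-weighted (maximum-likelihood) combination of two cues."""
    # Reliability is defined here as the inverse variance of each cue.
    rel_a = 1.0 / sigma_a ** 2
    rel_v = 1.0 / sigma_v ** 2
    w_a = rel_a / (rel_a + rel_v)
    w_v = 1.0 - w_a
    combined = w_a * est_a + w_v * est_v
    # The combined estimate is at least as precise as the better single cue.
    combined_sigma = (1.0 / (rel_a + rel_v)) ** 0.5
    return combined, combined_sigma


def combine_average(est_a, sigma_a, est_v, sigma_v):
    """Equal-weight averaging that ignores cue reliability."""
    combined = 0.5 * (est_a + est_v)
    combined_sigma = 0.5 * (sigma_a ** 2 + sigma_v ** 2) ** 0.5
    return combined, combined_sigma
```

With a noisy auditory cue and a precise visual cue, the optimal rule pulls the combined estimate toward the visual cue and lowers the predicted variability below that of either cue alone, whereas simple averaging can be less precise than the better cue on its own.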

Motor Stereotypies: Elucidating Brain-Behavior Relationships Across Sensory Landscapes

William Quackenbush

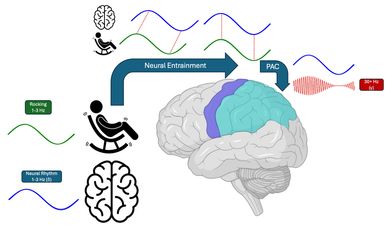

Motor stereotypies (STY) (e.g., body rocking, hand flapping) are a core feature of autism and appear more subtly in non-autistic individuals (e.g., leg bouncing). Though traditionally viewed as purposeless, personal accounts from both groups indicate that STY serve as a coping mechanism for sensory regulation, especially during environmental under- or overstimulation. This study will use motion capture kinematics, motion classifier models, mobile EEG, and augmented reality to investigate shared neural mechanisms underlying the sensory benefits of STY in autistic and non-autistic children and adolescents. The findings may provide a foundation for future work on improving sensory processing across populations.

Navigating the Near: Immersive Investigations of PPS in Autism

Hari Srinivasan

I investigate peripersonal space (PPS), the dynamic, flexible "invisible bubble" around our bodies that defines our actionable space, and how it differs in autism. The novel research task I designed prioritizes ecological validity and accessibility, potentially allowing a wider profile of autistic participants to take part. Using VR/AR, motion capture, physiological measures, and neuroimaging, I examine how sensory-motor integration shapes spatial perception and interaction. As an autistic researcher with ADHD, I am committed to bridging neuroscience with real-world applications: a deeper understanding of PPS can inform practice across education, employment, and daily life.

Cross-Modal Semantic Binding: Developmental Trajectories and Neural Mechanisms

Anna Machado

My research investigates how humans semantically bind visual and auditory objects across development, focusing on how these associations emerge and change over time. By constructing multimodal embeddings from behavioural and fMRI data, I aim to map the developmental trajectory of audiovisual integration and identify critical semantic binding windows where learning is most flexible.

Sensory Reliability, Perceptual Priors, and EEG Microstate Dynamics in Schizophrenia

Marlisa Shaw

My work focuses on understanding how reliable our senses are. I will test how well people detect sights and sounds and examine how much they rely on past expectations (“priors”) when sensory information is uncertain. I'll also investigate the role of priors in schizophrenia, exploring whether individuals with schizophrenia depend more on prior expectations and how this influences their perception. Finally, I'll use EEG to study how the brain adapts over time, examining how perceptual training alters neural dynamics and brain microstates.

Audio Localization in Cochlear Implant Patients

Jordan Washecheck

Cochlear implant users often struggle with sound localization. My work focuses on better understanding these challenges and exploring strategies to improve localization skills. I will begin by developing an audio localization task in our immersive sensory environment to assess performance, followed by creating a virtual reality training paradigm to enhance localization abilities. Because cholinergic medications have been shown to improve auditory learning after implantation, I may also conduct a clinical trial using donepezil.

Metacognitive and Contextual Influences on Multisensory Processing

Harper Marshall

My work focuses on understanding how context shapes multisensory integration and memory in naturalistic environments. I will use the VR CAVE to develop immersive paradigms that model real-world audiovisual memory and examine how context influences integration. I will investigate how semantic congruence between objects and sounds (for example, a dog barking versus a dog meowing) affects the encoding and retrieval of audiovisual memories. I will also study how environmental background cues, such as being in a library versus on a busy street, impact multisensory memory. Finally, I will explore how emotional and mental states—including stress, affect, and cognitive load—modulate audiovisual integration and memory in realistic settings.

Brain-Inspired AI

David Tovar

The focus of my work lies in understanding intelligence, both artificial and natural, through the development of AI models that capture cognitive processes. In building these models, my aim is to gain mechanistic insights into cognitive disorders that have historically been difficult to understand. Given that human sensory processing spans multiple modalities, my work also investigates the integration of multimodal signals across senses while observing neural, behavioral, and physiological outputs.

Motion Processing in Virtual Reality

Emily Garcia

My research investigates how humans perceive and discriminate visual motion signals within virtual environments using random dot kinematograms (RDKs) presented in VR. Specifically, I am assessing how display modality (3D VR versus traditional 2D CRT displays) influences motion discrimination performance, testing whether stereoscopic depth enhances sensitivity to motion signals. I am also comparing motion discrimination performance across sensory conditions (visual-only vs. audiovisual) to assess the benefits of multisensory integration for motion perception. Ultimately, I aim to deepen our understanding of multisensory integration and motion processing in more ecologically valid, 3D virtual settings.

Multisensory Environments In Longitudinal Development Consortium Research

The mission of the MELD consortium is to detail multisensory development from birth until young adulthood using a series of tasks designed within naturalistic, immersive environments. The knowledge gained will be critical in furthering our understanding of sensory development and the higher-order cognitive abilities this development scaffolds.

The MELD consortium consists of four international sites: Vanderbilt University, Yale University, Italian Institute of Technology, and University Hospital Center and University of Lausanne.

Motion Classification of Stereotypies

Individuals with autism often exhibit stereotyped movements (e.g., hand-flapping). As part of a larger project investigating the potential utility of these stereotypies, we are building pipelines to automatically classify marker-based movement datasets.

Virtual and Augmented Reality Investigations of Peripersonal Space

We are refining existing paradigms and developing a novel bubble-popping task to measure the extent and flexibility of peripersonal space. These tasks are suitable for children and for individuals with a variety of motor deficits, and will initially be used to test hypotheses about peripersonal space in autistic and neurotypical individuals.

Audiovisual Motion Processing

We are developing audio and audiovisual versions of the standard visual psychophysics tool known as a random dot kinematogram (RDK). These versions allow us to test a number of hypotheses about multisensory integration when processing moving stimuli.

App-Based Multisensory Integration Experiments

A phone app for collecting multisensory psychophysical data using standard paradigms.

Speeded Reaction Time (SRT) to Auditory, Visual, & Audiovisual Stimulation

This study measures SRT to unisensory and multisensory inputs in order to characterize the development of multisensory facilitation effects in children.
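One common way to quantify multisensory facilitation in reaction-time data is Miller's race model inequality, which asks whether audiovisual responses are faster than a simple race between independent auditory and visual channels would allow. The sketch below is illustrative only: it is not the study's analysis code, and the function names are hypothetical.

```python
def ecdf(rts, t):
    """Empirical probability that a response occurred at or before time t."""
    return sum(1 for rt in rts if rt <= t) / len(rts)


def race_model_violation(rt_a, rt_v, rt_av, times):
    """Return, per time point, how far the audiovisual CDF exceeds Miller's bound.

    Positive values mean audiovisual responses were faster than the bound
    given by the sum of the two unisensory CDFs (capped at 1).
    """
    violations = []
    for t in times:
        bound = min(1.0, ecdf(rt_a, t) + ecdf(rt_v, t))
        violations.append(ecdf(rt_av, t) - bound)
    return violations
```

Each argument is a list of reaction times (for example, in milliseconds) from one condition, and `times` is the set of time points at which to evaluate the inequality. Positive values at early time points are usually taken as evidence of facilitation beyond statistical summation.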

Active Passive Multisensory Perception Development

Movement can alter the perception of time in a multisensory environment. However, how this influence changes during development has not yet been investigated by directly comparing active and passive movement. Here, we evaluate how the temporal binding window is altered by active and passive movement. Haptic devices will be used for this purpose.

Serial Dependence Orientation Tactile Perception in Blind Individuals

The aim of this research line is to investigate how blind individuals perceive tactile stimuli and to examine whether the phenomenon of serial dependence also influences the perception of touch. Through a series of studies, this research seeks to explore whether prior tactile experiences shape the perception of subsequent stimuli, similar to the effect observed in visual perception. By analyzing these interactions, we aim to better understand how the brain processes and integrates sensory information in the absence of vision, shedding light on the mechanisms underlying sensory compensation and multisensory integration.

Serial Dependence in Multisensory Processes

It is currently unknown how performance on preceding trials influences the processing of current stimulus information, particularly under multisensory conditions where, on a given trial, a stimulus can partially "repeat" in one sensory modality while being "novel" in another.

Linking Low-Level Multisensory Processes to Higher-Level Cognition

Much of our prior work has suggested that there are links between low-level multisensory processes and higher-level cognitive (dys)functions. These links remain to be firmly established, particularly in children. We have collected both SRT data and measures of cognitive abilities (working memory, fluid intelligence) to assess putative links. This project aims to fill a knowledge gap between data acquired in younger schoolchildren and data acquired in adults by acquiring data from older adolescents (aged 15-18). Identifying such links would open new pathways for screening and intervention strategies.

EEG Frequency Tagging Analysis Pipelines

EEG frequency tagging is a fast and efficient way to obtain a high signal-to-noise ratio (SNR) in a short recording time, which makes it highly promising for use in pediatric populations. However, the analysis pipelines for this approach are heterogeneous and ill-defined. This project aims to generate a highly stable pipeline.
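As a rough illustration of what such a pipeline computes, one widely used convention expresses the response at the tagged frequency as the ratio of its spectral amplitude to the mean amplitude of neighboring frequency bins. The sketch below assumes a single-channel signal and uses hypothetical function names and parameter defaults; it is not the pipeline under development.

```python
import cmath
import math


def amplitude_at(signal, sample_rate, freq):
    """Amplitude of one frequency component via a single-bin DFT."""
    n = len(signal)
    acc = sum(x * cmath.exp(-2j * math.pi * freq * i / sample_rate)
              for i, x in enumerate(signal))
    return 2.0 * abs(acc) / n


def tagging_snr(signal, sample_rate, tag_freq, n_neighbors=4, skip=1):
    """Amplitude at the tagged frequency divided by the mean of nearby bins.

    `skip` bins immediately adjacent to the target are excluded on each side,
    then `n_neighbors` bins on each side are averaged as the noise estimate.
    """
    resolution = sample_rate / len(signal)  # width of one frequency bin in Hz
    neighbors = []
    for k in range(skip + 1, skip + n_neighbors + 1):
        for sign in (-1, 1):
            f = tag_freq + sign * k * resolution
            if f > 0:
                neighbors.append(amplitude_at(signal, sample_rate, f))
    noise = sum(neighbors) / len(neighbors)
    return amplitude_at(signal, sample_rate, tag_freq) / noise
```

The choices of how many neighboring bins to average and how many adjacent bins to skip are exactly the kind of parameters that vary between published pipelines.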

EEG Analysis Pipeline for Assessing Multisensory Processes in Children

A crucial element for the MELD consortium will be to establish a streamlined analysis pipeline for EEG data.

Cross-Modal Semantic Binding: Developmental Trajectories and Neural Mechanisms

Human cognition depends on the ability to integrate information from different sensory modalities into coherent semantic representations. While much is known about unimodal semantic processing, the mechanisms by which visual and auditory stimuli are integrated and how these processes evolve across developmental stages remain poorly understood. This project seeks to address these gaps by combining behavioral experiments, computational modeling, and neural validation to investigate cross-modal semantic binding across ages. By focusing on visual and auditory embeddings derived from behavioral tasks and validating these embeddings against neural data, this study aims to uncover how developmental plasticity influences the ability to form, sustain, and adapt semantic representations.
